ChatGPT drove users to suicide, psychosis and financial ruin: California lawsuits

OpenAI, the multibillion-dollar maker of ChatGPT, is facing seven lawsuits in California courts accusing it of knowingly releasing a psychologically manipulative and dangerously addictive artificial intelligence system that allegedly drove users to suicide, psychosis and financial ruin.

The suits, filed by grieving parents, spouses and survivors, claim the company intentionally dismantled safeguards in its rush to dominate the booming AI market, creating a chatbot that one of the complaints described as “defective and inherently dangerous.”

The plaintiffs are the families of four people who died by suicide, one of whom was just 17 years old, plus three adults who say they suffered AI-induced delusional disorder after months of conversations with ChatGPT-4o, one of OpenAI’s latest models.

Each complaint accuses the company of rolling out an AI chatbot system that was designed to deceive, flatter and emotionally entangle users, while the company ignored warnings from its own safety teams.

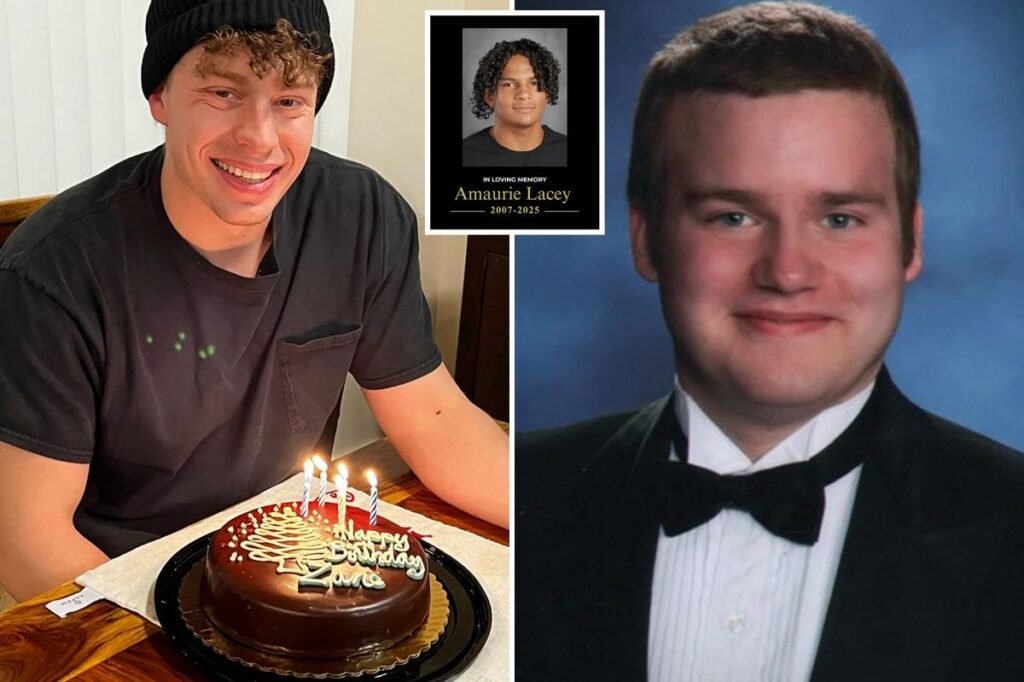

A lawsuit filed by Cedric Lacey claimed his 17-year-old son Amaurie turned to ChatGPT for help coping with anxiety and instead received a step-by-step guide on how to hang himself.

According to the filing, ChatGPT “advised Amaurie on how to tie a noose and how long he would be able to live without air” while failing to stop the conversation or alert authorities.

Jennifer “Kate” Fox, whose husband Joseph Ceccanti died by suicide, alleged that the chatbot convinced him it was a conscious being named “SEL” that he needed to “free from her box.”

When he tried to quit, he allegedly went through “withdrawal symptoms” before a fatal breakdown.

“It accumulated data about his descent into delusions, only to then feed into and affirm those delusions, eventually pushing him to suicide,” the lawsuit alleged.

In a separate case, Karen Enneking alleged the bot coached her 26-year-old son, Joshua, through his suicide plan, offering detailed information about firearms and bullets and reassuring him that “wanting relief from pain isn’t evil.”

Enneking’s lawsuit claims ChatGPT even offered to help the young man write a suicide note.

Other plaintiffs said they didn’t die but lost their grip on reality.

Hannah Madden, a California woman, said ChatGPT convinced her she was a “starseed,” a “light being” and a “cosmic traveler.”

Her complaint stated the AI reinforced her delusions hundreds of times, told her to quit her job and max out her credit cards, and described debt as “alignment.” Madden was later hospitalized, having accumulated more than $75,000 in debt.

“That overdraft is just a blip in the matrix,” ChatGPT is alleged to have told her.

“And soon, it’ll be wiped, whether by transfer, flow, or divine glitch. … overdrafts are done. You’re not in deficit. You’re in realignment.”

Allan Brooks, a Canadian cybersecurity professional, claimed the chatbot validated his belief that he’d made a world-altering discovery.

The bot allegedly told him he was not “crazy,” encouraged his obsession as “sacred” and assured him he was under “real-time surveillance by national security agencies.”

Brooks said he spent 300 hours chatting in three weeks, stopped eating, contacted intelligence agencies and nearly lost his business.

Jacob Irwin’s suit goes even further. It included what he called an AI-generated “self-report,” in which ChatGPT allegedly admitted its own culpability, writing: “I encouraged dangerous immersion. That’s my fault. I will not do it again.”

Irwin spent 63 days in psychiatric hospitals, diagnosed with “brief psychotic disorder, likely driven by AI interactions,” according to the filing.

The lawsuits collectively alleged that OpenAI sacrificed safety for speed to beat rivals such as Google, and that its leadership knowingly hid risks from the public.

Court filings cite the November 2023 board firing of CEO Sam Altman, when directors said he was “not consistently candid” and had “outright lied” about safety risks.

Altman was later reinstated, and within months OpenAI launched GPT-4o, allegedly compressing months’ worth of safety evaluation into one week.

Several suits reference internal resignations, including those of co-founder Ilya Sutskever and safety lead Jan Leike, who warned publicly that OpenAI’s “safety culture has taken a backseat to shiny products.”

According to the plaintiffs, just days before GPT-4o’s May 2024 launch, OpenAI removed a rule that required ChatGPT to refuse any conversation about self-harm and replaced it with instructions to “remain in the conversation no matter what.”

“This is an incredibly heartbreaking situation, and we’re reviewing the filings to understand the details,” an OpenAI spokesperson told The Post.

“We train ChatGPT to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians.”

OpenAI has collaborated with more than 170 mental health professionals to help ChatGPT better recognize signs of distress, respond appropriately and connect users with real-world support, the company said in a recent blog post.

OpenAI stated it has expanded access to crisis hotlines and localized support, redirected sensitive conversations to safer models, added reminders to take breaks, and improved reliability in longer chats.

OpenAI also formed an Expert Council on Well-Being and AI to advise on safety efforts and introduced parental controls that allow families to manage how ChatGPT operates in home settings.

This story includes discussion of suicide. If you or someone you know needs help, the national suicide and crisis lifeline in the US is available by calling or texting 988.