The Download: AI-enhanced cybercrime, and secure AI assistants

Just as software engineers are using artificial intelligence to help write code and check for bugs, hackers are using these tools to reduce the time and effort required to orchestrate an attack, lowering the barriers for less experienced attackers to try something out.

Some in Silicon Valley warn that AI is on the brink of being able to carry out fully automated attacks. But most security researchers instead argue that we should be paying closer attention to the much more immediate risks posed by AI, which is already speeding up scams and increasing their volume.

Criminals are increasingly exploiting the latest deepfake technologies to impersonate people and swindle victims out of vast sums of money. And we need to be ready for what comes next. Read the full story.

—Rhiannon Williams

This story is from the next print issue of MIT Technology Review magazine, which is all about crime. If you haven’t already, subscribe now to receive future issues once they land.

Is a secure AI assistant possible?

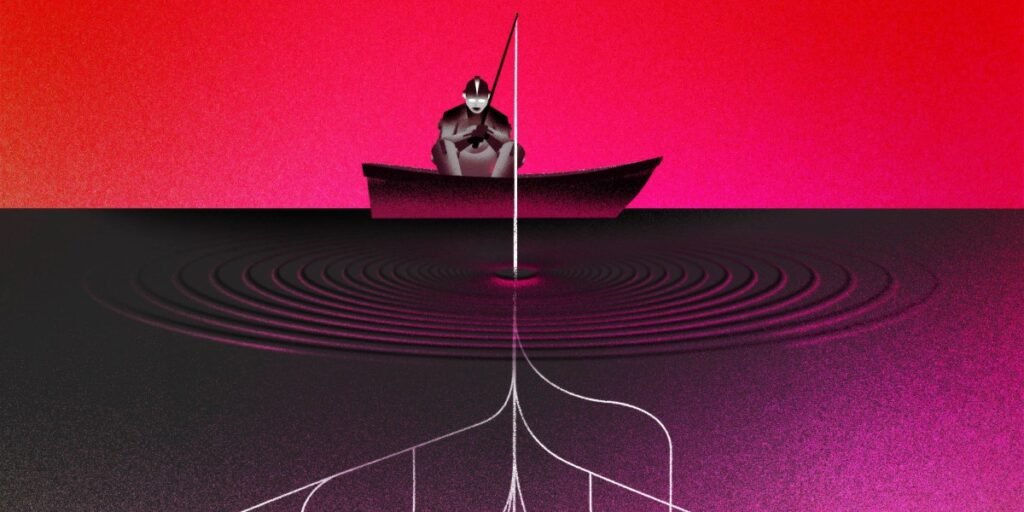

AI agents are a risky business. Even when stuck inside the chatbox window, LLMs will make mistakes and behave badly. Once they have tools that they can use to interact with the outside world, such as web browsers and email addresses, the consequences of those mistakes become far more serious.

Viral AI agent project OpenClaw, which has made headlines across the world in recent weeks, harnesses existing LLMs to let users create their own bespoke assistants. For some users, this means handing over reams of personal data, from years of emails to the contents of their hard drive. That has security experts thoroughly freaked out.

In response to these concerns, its creator warned that nontechnical people should not use the software. But there’s a clear appetite for what OpenClaw is offering, and any AI companies hoping to get in on the personal assistant business will need to figure out how to build a system that will keep users’ data safe and secure. To do so, they’ll need to borrow approaches from the cutting edge of agent security research. Read the full story.

—Grace Huckins