Anthropic’s auto-clicking AI Chrome extension raises browser-hijacking concerns

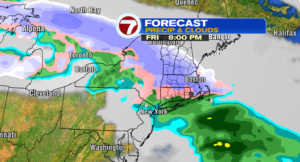

The company tested 123 cases representing 29 different attack scenarios and found a 23.6 percent attack success rate when browser use operated without safety mitigations.

One example involved a malicious email that instructed Claude to delete a user’s emails for “mailbox hygiene” purposes. Without safeguards, Claude followed these instructions and deleted the user’s emails without confirmation.

Anthropic says it has implemented several defenses to address these vulnerabilities. Users can grant or revoke Claude’s access to specific websites through site-level permissions. The system requires user confirmation before Claude takes high-risk actions like publishing, purchasing, or sharing personal data. The company has also blocked Claude from accessing websites offering financial services, adult content, and pirated content by default.
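
To make those three layers concrete, here is a minimal sketch of how such a gate could be ordered. It is not Anthropic’s implementation: the `SitePolicy` class, the action labels, and the host-to-category table are all hypothetical names chosen for illustration, and how a real system would categorize sites is not described in the source.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Set
from urllib.parse import urlparse


class Decision(Enum):
    ALLOW = "allow"
    ASK_USER = "ask_user"
    BLOCK = "block"


# Hypothetical labels mirroring the examples named in the article.
HIGH_RISK_ACTIONS = {"publish", "purchase", "share_personal_data"}
BLOCKED_CATEGORIES = {"financial_services", "adult_content", "pirated_content"}


@dataclass
class SitePolicy:
    # Hosts the user has explicitly granted; everything else is denied.
    granted_hosts: Set[str] = field(default_factory=set)
    # Assumed lookup from host to category; a stand-in for a real classifier.
    categories: Dict[str, str] = field(default_factory=dict)

    def grant(self, host: str) -> None:
        self.granted_hosts.add(host.lower())

    def revoke(self, host: str) -> None:
        self.granted_hosts.discard(host.lower())

    def check(self, url: str, action: str) -> Decision:
        host = (urlparse(url).hostname or "").lower()
        # 1. Default-blocked categories win, even over a user grant.
        if self.categories.get(host) in BLOCKED_CATEGORIES:
            return Decision.BLOCK
        # 2. Site-level permission: the user must have granted this host.
        if host not in self.granted_hosts:
            return Decision.BLOCK
        # 3. High-risk actions need explicit user confirmation.
        if action in HIGH_RISK_ACTIONS:
            return Decision.ASK_USER
        return Decision.ALLOW
```

In this sketch the decision depends only on the URL and the action type, never on text found in the page, so an instruction injected into an email or a web page cannot widen its own permissions. It does nothing to stop an injected instruction from misusing actions the user has already allowed.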

These safety measures reduced the attack success rate from 23.6 percent to 11.2 percent in autonomous mode. On a specialized test of four browser-specific attack types, the new mitigations reportedly reduced the success rate from 35.7 percent to 0 percent.

Independent AI researcher Simon Willison, who has extensively written about AI security risks and coined the term “prompt injection” in 2022, called the remaining 11.2 percent attack rate “catastrophic,” writing on his blog that “in the absence of 100% reliable protection I have trouble imagining a world in which it’s a good idea to unleash this pattern.”

By “pattern,” Willison is referring to the recent trend of integrating AI agents into web browsers. “I strongly expect that the entire concept of an agentic browser extension is fatally flawed and cannot be built safely,” he wrote in an earlier post on similar prompt-injection security issues recently found in Perplexity Comet.

The security risks are no longer theoretical. Last week, Brave’s security team discovered that Perplexity’s Comet browser could be tricked into accessing users’ Gmail accounts and triggering password recovery flows through malicious instructions hidden in Reddit posts. When users asked Comet to summarize a Reddit thread, attackers could embed invisible commands that instructed the AI to open Gmail in another tab, extract the user’s email address, and perform unauthorized actions. Although Perplexity attempted to fix the vulnerability, Brave later confirmed that its mitigations were defeated and the security hole remained.

For now, Anthropic plans to use its new research preview to identify and address attack patterns that emerge in real-world usage before making the Chrome extension more widely available. In the absence of good protections from AI vendors, the burden of security falls on the user, who is taking a large risk by using these tools on the open web. As Willison noted in his post about Claude for Chrome, “I don’t think it’s reasonable to expect end users to make good decisions about the security risks.”