Meta AI’s Llama language model modded to run on a nearly 20-year-old Xbox 360

A hot potato: The open-source project llama2.c is designed to run a lightweight version of the Llama 2 model entirely in C code. This "baby" Llama 2 model is inspired by llama.cpp, a project created to enable LLM inference across a wide range of hardware, from local devices to cloud-based platforms. These compact code experiments are now being used to run AI technology on almost any device with a chip, highlighting the growing accessibility and versatility of AI tools.

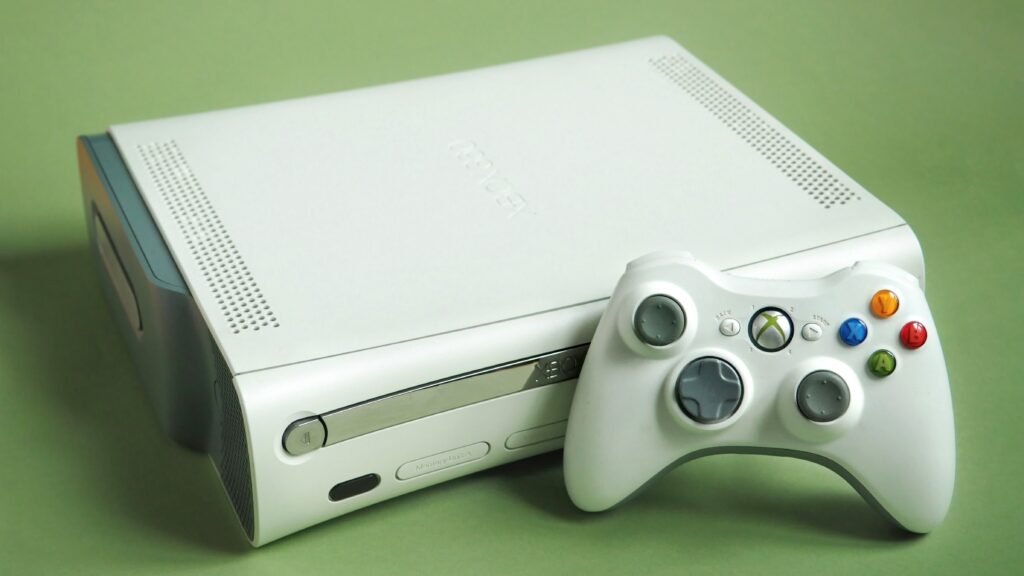

After seeing Exo Labs run a large language model on an ancient Pentium II running Windows 98, developer Andrei David decided to take on an even more unconventional challenge. Dusting off his Xbox 360 console, he set out to make the nearly two-decade-old machine load an AI model from Meta AI’s Llama family of LLMs.

David shared on X that he successfully ported llama2.c to Microsoft’s 2005-era gaming console. However, the process came with significant hurdles. The Xbox 360’s PowerPC CPU is a big-endian architecture, while model files produced on ordinary x86 PCs store their numbers in little-endian byte order, so both the model’s configuration and its weights required extensive endianness conversion. He also had to make substantial adjustments and optimizations to the original code to get it working on the aging hardware.
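The byte-order problem can be illustrated with a short C sketch. It assumes a checkpoint whose header and weights are stored as little-endian 32-bit values, with a header modeled on the seven-integer `Config` struct in llama2.c; the helper names `read_le32`, `decode_le32_array`, and `decode_config` are hypothetical and are not taken from David’s port. Instead of detecting the host’s byte order and swapping bytes, each value is assembled from its bytes with shifts, which produces the correct number on both a little-endian PC and the big-endian Xenon CPU.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assemble one 32-bit word from four little-endian bytes.
   Shifts operate on values, not on memory layout, so the result is the
   same on a little-endian x86 PC and a big-endian PowerPC. */
static uint32_t read_le32(const unsigned char *p) {
    return (uint32_t)p[0]
         | ((uint32_t)p[1] << 8)
         | ((uint32_t)p[2] << 16)
         | ((uint32_t)p[3] << 24);
}

/* Decode n little-endian 32-bit values from src into dst in host order.
   dst may hold int32_t or float (IEEE 754 single precision); memcpy moves
   the bits without violating C's strict-aliasing rules. Each word is read
   in full before it is written, so src and dst may be the same buffer. */
static void decode_le32_array(const unsigned char *src, void *dst, size_t n) {
    unsigned char *out = (unsigned char *)dst;
    for (size_t i = 0; i < n; i++) {
        uint32_t word = read_le32(src + 4 * i);
        memcpy(out + 4 * i, &word, sizeof word);
    }
}

/* Hypothetical header layout modeled on llama2.c's Config struct:
   seven 32-bit integers with no padding between them. */
typedef struct {
    int32_t dim, hidden_dim, n_layers, n_heads, n_kv_heads, vocab_size, seq_len;
} Config;

/* Decode the 28-byte header into a Config in host byte order. */
static void decode_config(const unsigned char *src, Config *cfg) {
    decode_le32_array(src, cfg, 7);
}
```

Because `decode_le32_array` tolerates `src == dst`, a weight blob read from disk could be converted in place once at load time, avoiding a second copy on a machine whose 512MB is shared between CPU and GPU, and leaving the per-token inference loop to work on native values.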

LLM running on Xbox 360

With Xenon CPU (3.2GHz PowerPC w/ 3 cores) and 512MB unified RAM. Based on @karpathy‘s🩷llama2.c, ported to run on Microsoft’s powerful console from 2005. Pure C implementation optimized for PowerPC architecture and Xbox memory management.

Inspired by… pic.twitter.com/e9oMLaWIyi

– Andrei David (@AndreiDavid) January 10, 2025

Memory management posed yet another significant challenge. The 60MB llama2 model had to be carefully structured to fit within the Xbox 360’s unified memory architecture, where the CPU and GPU share the same pool of RAM. According to David, the Xbox 360’s memory architecture was remarkably forward-thinking for its time, foreshadowing the memory management techniques now standard in modern gaming consoles and APUs.

After extensive coding and optimization, David successfully ran llama2 on his Xbox 360 using a simple prompt: "Sleep Joe said." Although the llama2.c implementation is just 700 lines of C code with no external dependencies, David noted that it can deliver "surprisingly" strong performance when tailored to a sufficiently narrow domain.

David explained that working within the constraints of a limited platform like the Xbox 360 forces you to prioritize efficient memory usage above all else. In response, another X user suggested that the 512MB of memory on Microsoft’s old console might be sufficient to run other small LLM implementations, such as SmolLM, created by AI startup Hugging Face.

The developer gladly accepted the challenge, so we’ll likely see more LLM experiments on the Xbox 360 in the not-so-distant future.